A good recap of Transformers

A nice write-up and brief essay on Transformers and the state of the art.

Excerpt from an article by Diego Lopez Yse:

" https://www.pinecone.io/learn/transformers/

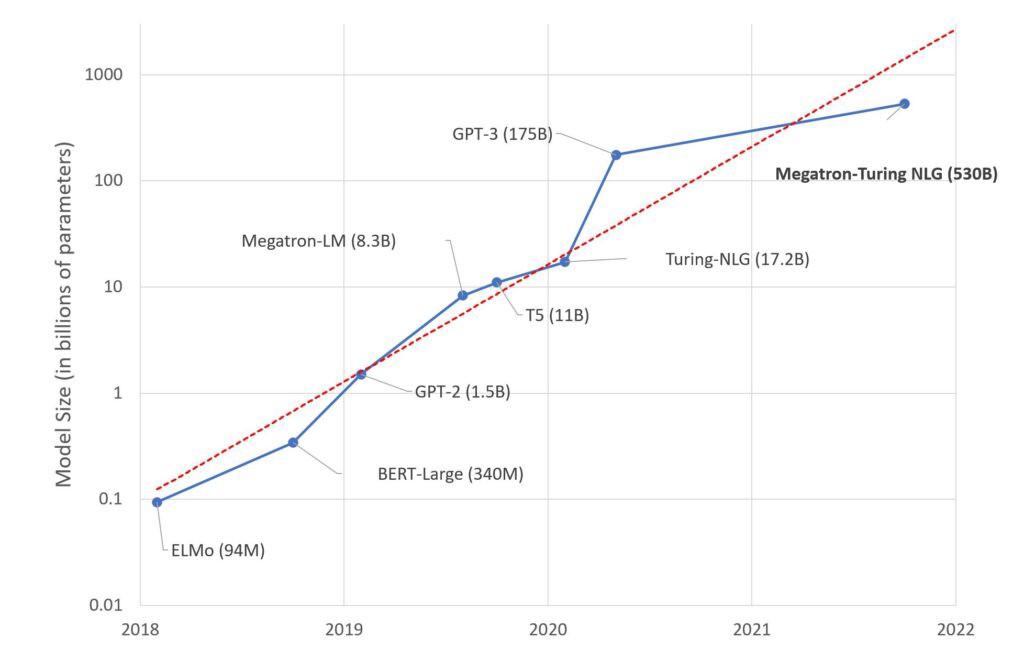

MEGATRON-TURING

You thought GPT-3 was big? A couple of months ago, Microsoft and Nvidia released the Megatron-Turing Natural Language Generation model (MT-NLG), which is more than triple the size of GPT-3 at 530 billion parameters.

As you can imagine, getting to 530 billion parameters required quite a lot of input data and just as much computing power. The algorithm was trained using an Nvidia supercomputer made up of 4,480 GPUs and an estimated cost of over $85 million.

This massive model is skilled at tasks like completion prediction, reading comprehension, common-sense reasoning, natural language inferences, and word sense disambiguation.

"
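Here is a quick sanity check of the "more than triple" claim, written as a small Python sketch. GPT-3's 175 billion parameters is its published size and is not stated in the excerpt. The 16-bit storage figure is an illustrative assumption. It covers the raw weights only, not the gradients, optimizer state, or activations that training also has to hold in memory.

```python
# Parameter counts in billions. GPT-3's 175B is its published size;
# MT-NLG's 530B comes from the excerpt above.
GPT3_PARAMS_B = 175
MT_NLG_PARAMS_B = 530


def size_ratio(big_b: float, small_b: float) -> float:
    """Return how many times larger the first model is than the second."""
    return big_b / small_b


def weight_storage_tb(params_b: float, bytes_per_param: int = 2) -> float:
    """Raw weight storage in terabytes (10**12 bytes).

    Assumes 2 bytes per parameter (16-bit floats) by default. This counts
    the weights alone and ignores gradients, optimizer state and activations.
    """
    return params_b * 1e9 * bytes_per_param / 1e12


ratio = size_ratio(MT_NLG_PARAMS_B, GPT3_PARAMS_B)
print(f"MT-NLG / GPT-3: {ratio:.2f}x")
print(f"MT-NLG weights at 16-bit: {weight_storage_tb(MT_NLG_PARAMS_B):.2f} TB")
```

The ratio is 530 / 175 ≈ 3.03, so "more than triple" holds, though only just. At 2 bytes per parameter, the weights alone come to roughly 1 TB. That is far more than a single GPU can hold, which helps explain why a model of this size has to be spread across many devices.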
